
The definitive guide to A/B tests in your email marketing

Discover what A/B testing is and how your email marketing strategy can benefit from it. Find out the 7 magical rules for A/B testing best practice.


We spend a lot of time here discussing how you can optimize your email campaigns for your audience. We talk about deliverability, design, and marketing best practices. But there is one other important aspect of email marketing, and really of any kind of marketing: testing.

You wouldn't release a new product without first testing whether it works, so why wouldn't you do the same with emails?

A/B testing in email marketing allows you to test which campaign works best, which message resonates with your audience, and which call-to-action (CTA) buttons generate the most clicks.

So, if you're wondering "How in the world can I do that?", worry not: help is on the way. We've compiled the definitive guide to A/B tests in your email marketing.

Table of contents

1. Identify the problem

2. Define a hypothesis

3. Test the hypothesis

4. Analyze the test data and draw conclusions

#1: Set goals - Know what you want to test (and why) in your email campaigns

#2: Focus on frequently sent emails

#3: Split your list randomly

#4: Test one element at a time

#5: Wait the right amount of time

#6: Check if results are statistically significant

#7: Test and test again

A/B test subject lines

A/B test images

A/B test copywriting

A/B test calls-to-action

A/B test links

A/B test sending time

What is A/B testing in email marketing?

A/B testing allows you to compare two versions of the same piece of content. You can use it to test website copy, paid search ads, and, of course, marketing and transactional emails.

In the context of email, A/B testing allows you to slightly tweak a part of your emails to test which version generates more opens, clicks, and conversions. For example, does adding an emoji to a subject line increase opens? Does a bright red button (instead of a white button) increase clicks?

Split testing (another common name for A/B testing) ranges from simple to complex.

Simple A/B tests change one or two elements that are easy to customize, such as the subject line or a button's color and size.

Advanced tests customize multiple elements of your email campaign, such as image placement, overall messaging, and personalization, or compare different email templates against each other.

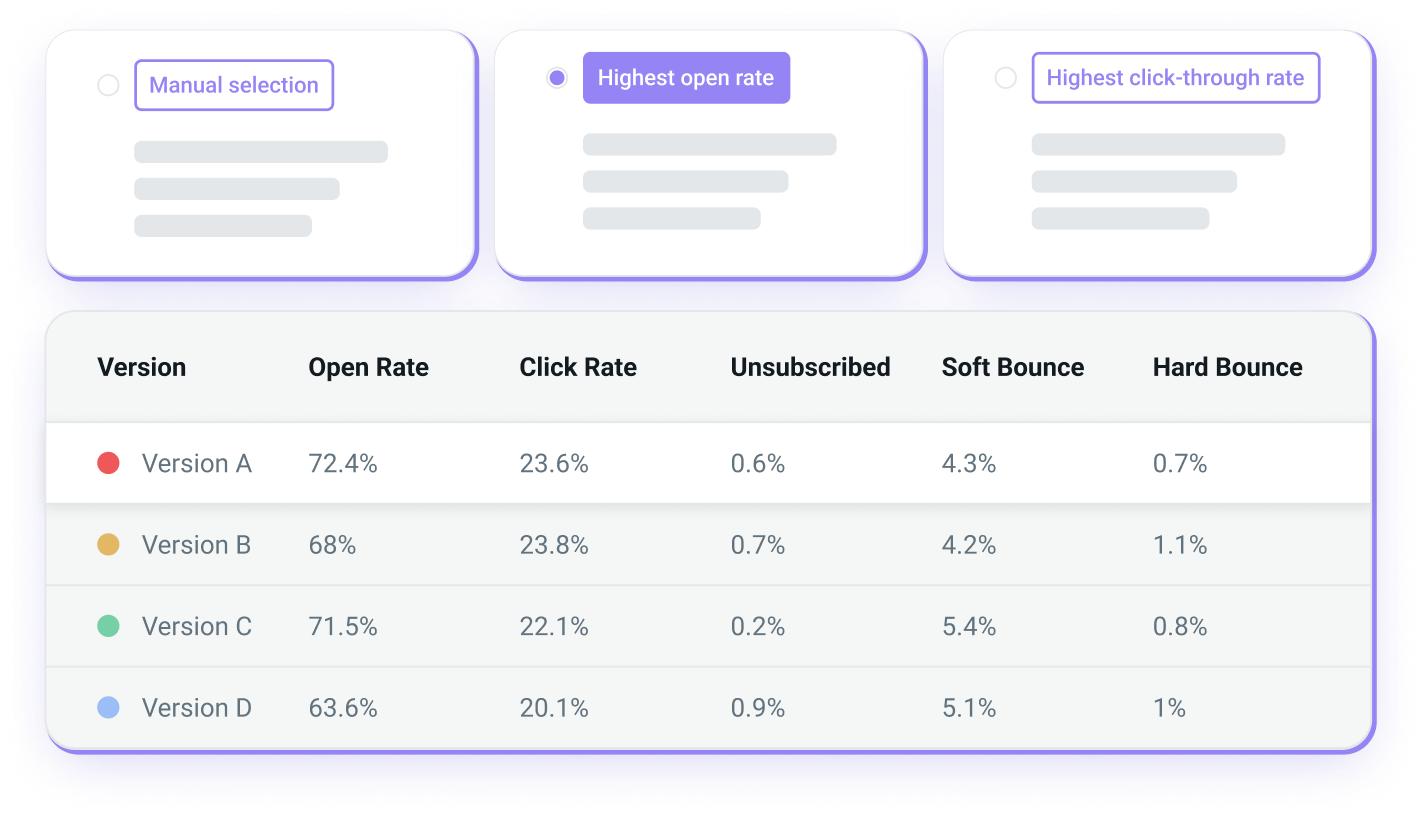

You can do this manually, by monitoring performance and basing future campaigns on your analysis, or you can automate the process with an A/B testing tool like Mailjet's. This type of tool sends different variants of your campaign to a small sample of your audience (for example, 20% of your list) and then sends the best-performing version to the remaining 80%.

Benefits of split testing your email campaigns

To get the best results possible, you need to test and analyze all your email campaigns (marketing emails, transactional emails, and email automation workflows). While the cost of acquiring new customers and newsletter subscribers can be high, the incremental cost of improving your email conversions through A/B testing is minimal.

A well-planned split test increases the effectiveness of your email marketing efforts. Controlled tests show you which content and visual arrangements work best for your target groups, and the better you know what works, the higher the chance that your content will resonate with your audience.

Here are some further benefits of email A/B testing:

Increase open rates – You can experiment with different subject lines, sender names, or preview text to identify what resonates with your audience and optimize accordingly.

Increase click-through rates (CTR) – Again, you can play around with different elements such as content type, length, CTA count, etc., to identify what works for your audience.

Improve conversions – The more subscribers engage with your content and CTAs, the higher your overall conversions will be.

The good news? Even small tests, followed by optimization, can deliver a large return on investment (ROI).

Struggling to get buy-in from the rest of the team around A/B testing? Check out Emily Benoit’s session on building a collaborative email A/B testing culture at Email Camp 2023!

How to run an email A/B test in 4 steps

The best way to run a successful A/B test is to follow a strict process, which will also help you extract deeper insights from your campaigns.

We recommend you include the following steps when setting up your email A/B testing:

1. Identify the problem

Study your email campaign statistics – what could be improved?

Map your subscribers' behavior and find the problem areas in your conversion funnel to identify areas for optimization. But don’t stop after the first click-through: include the landing pages your audience reaches after clicking a link in your email.

2. Define a hypothesis

Build a hypothesis based on your analysis. What do you think could be improved and how?

Define which result you expect from which changes. For example, a hypothesis could be:

“My customers do not like to scroll down. Putting the CTA button at the top will increase their attention and result in higher conversion rates.”

Or...

“Most of my readers open my newsletter on their smartphone. Increasing the size of the CTA button will make it easier for them to click on it which will result in higher conversion rates.”

Clearly identify what you’re looking to change and what you think the impact will be before starting the test.

3. Test the hypothesis

Based on your hypothesis, set up the split test. Create a variation and A/B test it against your current email template.

It’s also important to keep in mind what metrics you’d need to track to measure the success of your test. These metrics should always be related to the hypothesis you are testing.

For example, if you think email opens will improve by adding the sender’s name or company name to your From name, or by personalizing the subject line with the subscriber’s name or editing the preview text, then a good metric to track would be email open rates.

4. Analyze the test data and draw conclusions

Once you've sent your split email campaign to the defined target groups, it's time to monitor the results. Which variation performs best?

For example, if you were trying to drive higher open rates and a new version performed better than your previous one, then you’ll know which email is your winner moving forward.

If there is a clear winner, then go ahead with its implementation. If the A/B test results remain inconclusive, go back to step number two, and rework your hypothesis.
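Whether a winner is "clear" is easier to judge with a significance test than by eye. The sketch below shows one common option, a pooled two-proportion z-test in plain Python. It is an illustration, not the method Mailjet or any other ESP uses, and the function name `two_proportion_p_value` is hypothetical. The same function works for open, click, or conversion counts.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(successes_a, sends_a, successes_b, sends_b):
    """Two-sided p-value for the difference between two rates (pooled z-test).

    Hypothetical helper, not an ESP API. `successes_*` can be opens, clicks,
    or conversions; `sends_*` is the number of delivered emails per variant.
    """
    rate_a = successes_a / sends_a
    rate_b = successes_b / sends_b
    # Under the null hypothesis, both variants share one underlying rate.
    pooled = (successes_a + successes_b) / (sends_a + sends_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if std_err == 0:
        # Both variants scored 0% (or both 100%): nothing to distinguish.
        return 1.0
    z = (rate_a - rate_b) / std_err
    # Probability of a gap at least this large if there were no real effect.
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: 1,000 delivered emails per variant.
print(two_proportion_p_value(200, 1000, 260, 1000))  # small p-value: likely a real difference
print(two_proportion_p_value(200, 1000, 205, 1000))  # large p-value: inconclusive
```

A p-value below 0.05 is a common convention for calling a winner, not a hard rule. A result above your threshold is a sign to treat the test as inconclusive and revisit your hypothesis.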

A/B testing best practices to boost your email strategy

Following email A/B testing best practices gives your experiments a fair chance at accurately testing your hypothesis. Hopefully, this will lead to improved CTRs, higher conversion rates, and more active engagement with your audience.

To help you out, we’ve put together seven key best practices we believe are critical for email A/B testing:

#1: Set goals - Know what you want to test (and why) in your email campaigns

Testing without a specific goal in mind is just wasting time.

Don't pick an A/B test at random. Understand why you want to use split testing (to increase open rates or click-through rates, or to test new messaging or pricing models) and think about which changes could get you the desired results.

#2: Focus on frequently sent emails

The moment you start conducting A/B tests, you might get carried away and want to test all your email campaigns at the same time.

However, we recommend you stay calm, take a deep breath, and only focus on the emails you are sending most frequently.

#3: Split your list randomly

Choose a small, random portion of your target audience to find the best-performing email version before sending the campaign to the rest of your contact list.

To get conclusive results, make sure each variant goes to a sample of the same size.
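A random, equal-size split can be sketched in a few lines of Python. This is a minimal illustration: the function name `split_for_ab_test` and its parameters are hypothetical, and the 20% default test fraction only mirrors the 20/80 split mentioned earlier. Your ESP may split lists differently.

```python
import random


def split_for_ab_test(contacts, num_variants=2, test_fraction=0.2, seed=None):
    """Randomly assign a test sample to equal-size variant groups.

    Hypothetical helper. Returns (groups, remainder): `groups` holds
    `num_variants` lists of equal length; `remainder` holds everyone else,
    who later receive the winning version.
    """
    if num_variants < 2:
        raise ValueError("an A/B test needs at least two variants")
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be in (0, 1]")

    shuffled = list(contacts)               # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # random order avoids selection bias

    test_size = int(len(shuffled) * test_fraction)
    per_group = test_size // num_variants   # same sample size for every variant

    groups = [shuffled[i * per_group:(i + 1) * per_group] for i in range(num_variants)]
    # Contacts left over from rounding join the remainder.
    remainder = shuffled[num_variants * per_group:]
    return groups, remainder
```

With 1,000 contacts, two variants, and the default settings, each variant goes to 100 randomly chosen contacts and the other 800 wait for the winner. Passing a fixed `seed` makes the split reproducible, which helps if you need to audit a test later.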

#4: Test one element at a time

To identify which variation works best, change just one element at a time and keep all other variables the same. For example, create a few different CTA colors, but do not change anything else.

This way you can identify whether an increase in engagement is because of the CTA color. If the color and the text are both tested at the same time, then how can you tell which change drove the most clicks?

#5: Wait the right amount of time

One suggestion here is to look at engagement data from previous campaigns. How long does it typically take for most of your audience to engage with your content? A few hours? A day? You can use that cutoff as a yardstick for deciding how long to let your split test run before sending the winning version to the rest of your subscribers.

This gives you enough time to gather data before making any drastic changes to your email content.

#6: Check if results are statistically significant

The main challenge in email A/B testing is getting a large enough sample size. You want enough data to back your hypothesis confidently before rolling out any major changes. In fact, many email service providers (ESPs) monitor this number for you and may even limit testing if your sample size isn’t big enough.

So, if your list is too small to set up a “percentage winner” test, try running a 50/50 split test over a single campaign. You can then see which version worked best and apply those changes to future campaigns.
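How large is "large enough"? A common rough estimate uses the normal-approximation sample-size formula for comparing two proportions. The sketch below assumes a two-sided test at 5% significance and 80% power, which are conventional defaults rather than figures from this guide, and the function name `sample_size_per_variant` is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate contacts needed in EACH variant to detect a rate change.

    Hypothetical helper using the standard normal-approximation formula for
    a two-sided comparison of two proportions.
    """
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    n = (z_alpha + z_beta) ** 2 * variance / (baseline_rate - expected_rate) ** 2
    return math.ceil(n)  # round up: you can't email part of a contact


# Current open rate 20%, hoping to detect a lift to 22%.
print(sample_size_per_variant(0.20, 0.22))  # about 6,500 per variant
```

Smaller expected lifts need much larger samples, which is one reason a single 50/50 split, which puts as many contacts as possible into each variant, can suit small lists better.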

#7: Test and test again

A failed test isn’t necessarily a failure. In fact, the key to A/B testing is to learn from what works and what doesn’t and to continue to iterate based on your results.

After the testing comes more testing. By now, you may know your best email subject lines, calls-to-action, send times, and days of the week to send, and hopefully much, much more. Next, test different elements, and rinse and repeat.

6 A/B test examples to boost your email marketing campaigns

Wondering where to start? We’ve got a few A/B tests you can easily introduce to improve your campaign performance, from design to text-related elements.

To understand which email marketing campaigns perform best, you need to test both kinds of elements. A well-designed email helps, but what really drives conversions is the content you provide.

Get started with these A/B test ideas to drive more email engagement.

A/B test subject lines

The very first thing you should test is your subject line. Your hard work may be for naught if your audience does not even open your email, so make sure you write a subject line that encourages them to open it.

You can try out clear messages (“Our special Christmas offer for you”) or more mysterious subject lines (“You really do not want to miss this offer”). You can even play around with emojis, preheader text, and personalization to drive opens.

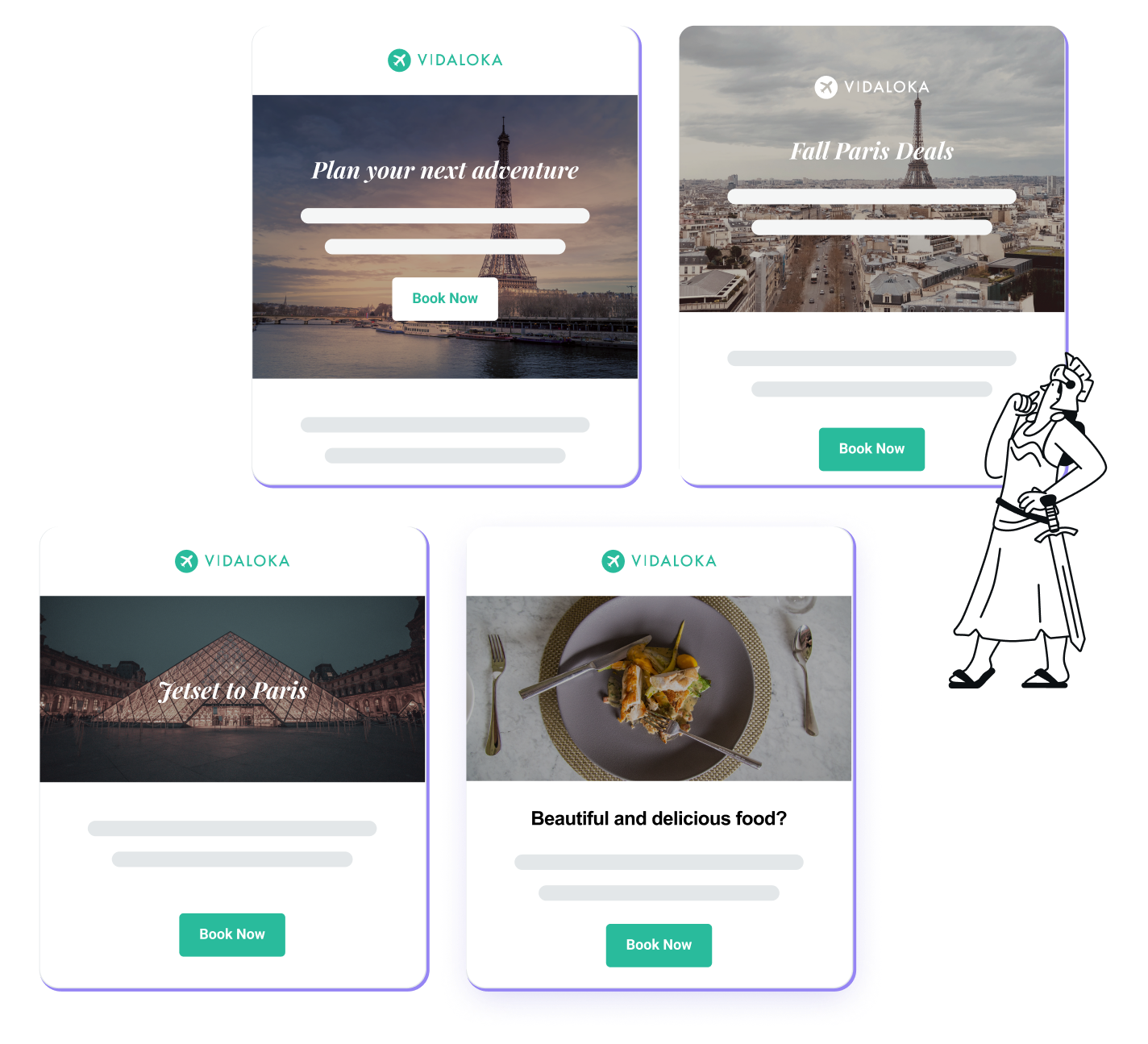

A/B test images

Once your killer subject line has driven a ton of opens, you can focus on optimizing the content of the email.

This is particularly important for ecommerce brands, where pictures and other visuals will be the first thing that catches the eye of your reader. Try different banners, product pictures, and other captivating images. You could also experiment with GIFs, video previews, and other visuals to drive engagement.

A/B test copywriting

Sometimes, fancy visuals and funky subject lines aren’t enough to convince your audience, and your body copy needs to win them over.

Try different wording, text length, and placement. Focus on the key messages and wrap the other elements around them.

For example, if you are an online shop, you could test prices or discounts, headings, text sizes, colors, and placement. This would be particularly useful around key shopping dates like Black Friday or Valentine’s Day.

A/B test calls-to-action

Once your headlines, subtitles, and paragraph text are optimized, it’s time to look at your calls-to-action (CTAs).

CTA buttons are one of the most important elements in a marketing email. This is what it all comes down to: your email is meant to get your readers' attention, but above all, it must drive leads to your website.

So, do not forget CTAs in your testing. Play around with colors, sizes, text, placement, and so on. Keep in mind that the CTA text also needs to be on point.

A/B test links

Besides CTAs, there are other links you can include and test, such as social media buttons.

Most brands now include social media buttons in their marketing emails, linking to other channels like Twitter and Facebook. To see how to get the best engagement, test different formats, colors, and sizes.

A/B test sending time

Finally, you’ll want to test the date and time you send your campaigns.

Does your audience prefer to open their emails in the morning, in the evening, during the week or at the weekend? Use your testing to find out and create segments for each to maximize engagement going forward.
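Once testing shows when different subscribers tend to open, you could build send-time segments from their open history. This Python sketch assumes you have each subscriber's open times as integer hours (0 to 23, in their local time). The window boundaries, segment names, and function names are hypothetical, and the sketch covers time of day only, not weekday versus weekend.

```python
from collections import Counter

# Hypothetical time-of-day windows (integer local hours); adjust to your audience.
WINDOWS = {
    "morning": range(5, 12),
    "afternoon": range(12, 17),
    "evening": range(17, 22),
}


def window_for_hour(hour):
    """Map an integer hour (0-23) to a named window; 22:00-04:59 is 'night'."""
    for name, hours in WINDOWS.items():
        if hour in hours:
            return name
    return "night"


def build_send_time_segments(open_hours_by_subscriber):
    """Group subscribers by the window in which they most often open email."""
    segments = {}
    for subscriber, hours in open_hours_by_subscriber.items():
        if hours:
            counts = Counter(window_for_hour(h) for h in hours)
            window = counts.most_common(1)[0][0]
        else:
            window = "no_data"  # no recorded opens: keep at your default send time
        segments.setdefault(window, []).append(subscriber)
    return segments
```

Subscribers with no recorded opens land in a `no_data` segment, so you can keep sending to them at your usual time while the other segments get their preferred windows.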

Learn more: Don’t know where to begin when it comes to testing send times? We have some pretty good data on the best time to send email newsletters as a starting point for your A/B testing.

Start A/B testing your emails with Mailjet

Now you know everything you need to start A/B testing your email marketing campaigns: the elements you should focus on, the best practices, and some examples to help guide your thinking.

So, if you’re ready to remove the guesswork from your email marketing and want to create content that truly resonates with your audience, then give Mailjet a try. The ESP allows you to set the testing criteria of your campaign and pick subsets of your customer list to test up to 10 versions of your campaign.

You can then automatically send the winning version to the rest of your subscriber base to increase engagement across the rest of your campaign. Sign up for a Mailjet account and let’s get the split testing party started.