
Big Data: What is it and how does it work?

The term Big Data first appeared in the 1960s, but it is taking on new importance today. Find out what Big Data is and how it works.


More and more data is being created every day. We are storing more information about each person, and we are starting to store more information from devices, too. The Internet of Things is no longer imaginary, and soon even your coffee machine will be tracking your coffee-drinking habits and storing them in the cloud. The term Big Data first appeared in the 1960s, but it is taking on new importance today.

Table of contents

1. Integration

2. Management

3. Analysis

Volume

Velocity

Variety

Veracity

Value

Variability

Product Development

Comparative analysis

Customer Experience

Machine Learning

Scalability and predicting failures

Fraud and Compliance

What is Big Data?

Did you know that a jet engine can generate more than 10 terabytes of data in only 30 minutes of flying? Now think about how many flights there are per day: that adds up to petabytes of information daily. The New York Stock Exchange generates about one terabyte of new trade data per day. Photo and video uploads, messages, and comments on Facebook create more than 500 terabytes of new data every day. That’s a lot of data, right? That’s what we call Big Data.
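To see how quickly these numbers add up, here is a rough back-of-the-envelope calculation in Python. The 10 terabytes per 30 minutes comes from the example above; the number of flights and the average flight length are purely illustrative assumptions, not real statistics, and the sketch counts one engine per flight to keep things simple.

```python
# Back-of-the-envelope estimate of daily jet engine data.
TB_PER_HALF_HOUR = 10            # figure quoted in the article
ASSUMED_FLIGHTS_PER_DAY = 1_000  # hypothetical, for illustration only
ASSUMED_FLIGHT_HOURS = 2         # hypothetical, for illustration only


def daily_engine_data_pb(flights, hours_per_flight, tb_per_half_hour=TB_PER_HALF_HOUR):
    """Return the estimated daily volume in petabytes (1 PB = 1,000 TB)."""
    half_hours = hours_per_flight * 2
    total_tb = flights * half_hours * tb_per_half_hour
    return total_tb / 1_000


estimate_pb = daily_engine_data_pb(ASSUMED_FLIGHTS_PER_DAY, ASSUMED_FLIGHT_HOURS)
print(f"Roughly {estimate_pb:.0f} PB per day under these assumptions")
```

Even with these modest made-up inputs, the result lands in the petabyte range, which is the point of the example.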

Big Data is becoming an increasingly inseparable part of our lives. Everyone uses some kind of technology or comes into contact with the products of big companies. Those companies offer us their data while also using the data we give them, and they constantly analyze that data to operate more efficiently and develop new products.

To really understand Big Data, it’s helpful to know something about its history. By definition, Big Data is data that contains greater variety, arrives in increasing volumes, and moves with ever-higher velocity. That is why, when we speak about Big Data, we always talk about the “Big Vs” of Big Data. There are more than three now, because the concept behind Big Data has evolved.

Data storage is cheaper today than it was a few years ago, which makes it easier and less expensive to store more data. But why do you need so much data? Data can help with almost anything: you can present it to your customers, use it to create new products and features, base business decisions on it, and much more.

The name Big Data is not that new, but the concept behind handling large amounts of data keeps changing. What we called Big Data a few years ago was far less data than what we mean by it now. The story started around the 1960s, when the first data centers were opened.

Forty years later, companies saw how much data could be gathered through online services, sites, applications, and any other product that customers interact with. This is when the first Big Data tools (Hadoop, NoSQL databases, etc.) started gaining popularity. Such tools became essential because they made storing and analyzing Big Data easier and cheaper.

The Internet of Things is no longer only a dream. More and more devices are connected to the internet, gathering data on customer usage patterns and product performance. And then someone asked, “Why not use that to have machines learn by themselves?” Machine learning took off as a result, and it started generating data, too.

Source: FreeCodeCamp

Can you imagine how much data this is? And on top of that, can you imagine how many uses you can find for all this data? Having this much data helps you make decisions because far more of the information you need is at hand, and many problems become easier to resolve.

Simply put, Big Data means larger and more complex data sets, especially from new data sources. These sets are so large that traditional data processing software could not manage them easily, so a new set of tools was created.

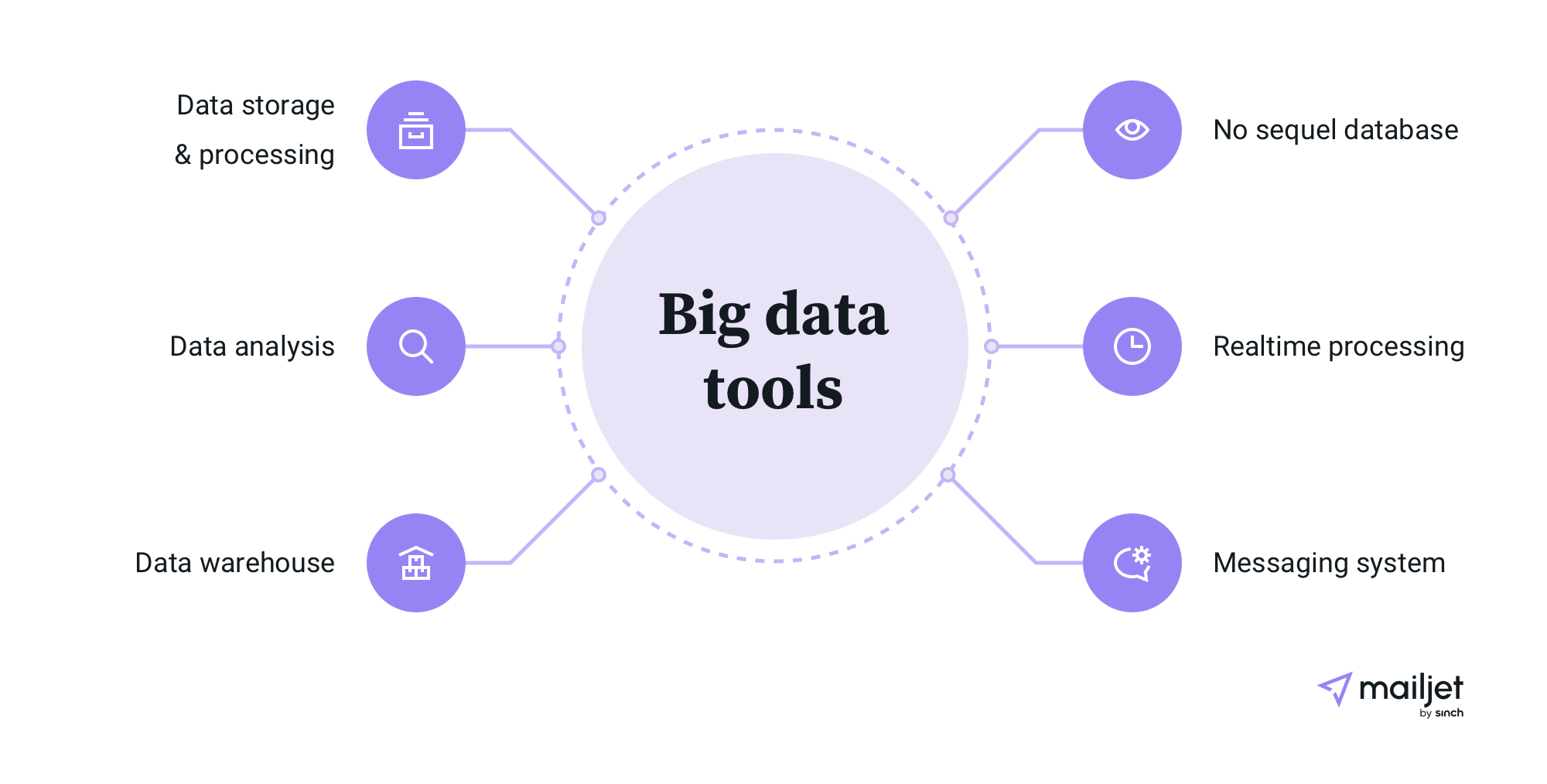

Big Data Tools

Because Big Data is always growing, the tools used with it are also always evolving and improving. Tools such as Hadoop, Pig, Hive, Cassandra, Spark, and Kafka are used depending on the requirements of the organisation. There are many solutions, and a large share of them are open source. The Apache Software Foundation (ASF) supports many of these Big Data projects.

Because these tools are so important for Big Data, we are going to say a few words about some of them. One of the most established tools for analyzing Big Data is Apache Hadoop, an open-source framework for storing and processing large sets of data.

Another tool that is getting more and more attention is Apache Spark. One of Spark’s strengths is that it can keep a large part of the data it is processing in memory rather than only on disk, which can make processing much faster. Spark can work with the Hadoop Distributed File System (HDFS), Apache Cassandra, OpenStack Swift, and many other data storage solutions. Another of its best features is that Spark can run on a single local machine, which makes working with it much easier.

Another solution is Apache Kafka, which allows users to publish and subscribe to real-time data feeds. Kafka’s main goal is to bring the reliability of other messaging systems to streaming data.
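If "publish and subscribe" sounds abstract, the idea can be sketched in a few lines of plain Python. To be clear, this is a toy in-memory model and not Kafka's API: the `TinyBroker` class, its method names, and the `clicks` topic are all hypothetical and exist only to illustrate the pattern.

```python
from collections import defaultdict


class TinyBroker:
    """Toy in-memory publish/subscribe broker (hypothetical, not Kafka's API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks
        self._log = defaultdict(list)          # topic -> messages in arrival order

    def subscribe(self, topic, callback):
        """Register a function to be called for every new message on a topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Record the message, then hand it to every subscriber of the topic."""
        self._log[topic].append(message)
        for callback in self._subscribers[topic]:
            callback(message)

    def history(self, topic):
        """Return a copy of everything published on a topic so far."""
        return list(self._log[topic])


broker = TinyBroker()
received = []
broker.subscribe("clicks", received.append)
broker.publish("clicks", {"user": "a", "page": "/pricing"})
broker.publish("clicks", {"user": "b", "page": "/home"})
print(received)
```

The publisher never needs to know who is listening, which is what makes this pattern a good fit for real-time data feeds. A real streaming platform adds durability, partitioning, and delivery guarantees on top of this basic idea.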

Other big data tools are:

Apache Lucene is a full-text indexing and search library that can be used to build recommendation engines.

Apache Zeppelin is a web-based notebook that enables interactive data analytics with SQL and other programming languages.

Elasticsearch is more of an enterprise search engine. Its main strength is that it can generate insights from both structured and unstructured data.

TensorFlow is a software library for machine learning that is gaining more and more attention.
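Full-text indexing, which search tools like the ones above rely on, can be illustrated with a small inverted index. This is only a sketch of the general technique in plain Python; it is not how Lucene or Elasticsearch are implemented, and the function names and sample documents are made up for the example.

```python
from collections import defaultdict


def build_index(documents):
    """Map each lowercase word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word.strip(".,!?")].add(doc_id)
    return index


def search(index, query):
    """Return the ids of documents that contain every word in the query."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


docs = {
    1: "Big Data tools keep evolving.",
    2: "Search tools index large data sets.",
    3: "Coffee machines store habits in the cloud.",
}
index = build_index(docs)
matches = search(index, "data tools")
print(matches)
```

Because the index is built once and each lookup only touches the words in the query, searching stays fast even as the number of documents grows.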

Big Data will continue to grow and change, and its tools will do the same. In a few years, the tools we use may be completely different. As we mentioned, some tools work with structured data and others with unstructured data. Let’s see what we mean by that.

Types of Big Data

Behind Big Data, there are three types of data: structured, semi-structured, and unstructured. Each type holds a lot of useful information that you can mine for different projects.

Structured data has a fixed format and is frequently numeric. In most cases it is handled by machines rather than humans. This type of data consists of information the organization already manages in SQL databases, spreadsheets, data lakes, and data warehouses.

Unstructured data is information that is unorganized and does not fall into a predetermined format, because it can be almost anything. For example, it includes data gathered from social media sources, which can be stored as text document files in Hadoop-like clusters or NoSQL systems.

Semi-structured data combines elements of both forms; examples include web server logs or data from sensors that you have set up. To be precise, it refers to data that has not been organized into a particular repository (database) but still contains tags or other markers that separate the individual elements within the data.
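The difference between the three types is easier to see with a tiny sample of each. The snippet below uses only Python's standard library; the field names and values are invented for illustration.

```python
import csv
import io
import json

# Structured: fixed columns, and every row has the same fields.
structured = io.StringIO("customer_id,plan,monthly_spend\n42,pro,25.00\n")
rows = list(csv.DictReader(structured))

# Semi-structured: no fixed schema, but keys (tags) label each element.
semi_structured = '{"sensor": "t-1", "reading": 21.5, "tags": ["kitchen"]}'
event = json.loads(semi_structured)

# Unstructured: free text, so any structure has to be extracted afterwards.
unstructured = "Loving the new coffee machine, but the app keeps crashing!"
mentions_crash = "crash" in unstructured.lower()

print(rows[0]["plan"], event["reading"], mentions_crash)
```

Notice that the structured and semi-structured samples can be queried by field name right away, while the unstructured text needs some form of processing before it can answer even a simple question.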

Big Data always comes from multiple sources, and most of the time it includes different types of data, too. Knowing how to integrate all the tools you need to work with those different types is not always an easy task.

How does Big Data work?

The main idea behind Big Data is that the more you know about something, the more insight you can gain to make a decision or find a solution. In most cases this process is largely automated: advanced tools can run millions of simulations to find the best possible outcome. But to achieve that with analytics tools, machine learning, or even artificial intelligence, you need to know how Big Data works and set everything up correctly.

Handling so much data requires a stable and well-structured infrastructure. It needs to process huge volumes and different types of data quickly, which can overload a single server or cluster. This is why you need a well-thought-out system behind Big Data.

All processes should be planned according to the capacity of the system. For larger companies this can demand hundreds or thousands of servers. As you can imagine, this can get pricey, and when you add in all the tools you will need, the costs pile up. You therefore need to know how Big Data works and the three main actions behind it, so you can plan your budget beforehand and build the best system possible.

1. Integration

Big Data is always collected from many sources, and because we are talking about enormous loads of information, new strategies and technologies are needed to handle it. In some cases, petabytes of information flow into your system, so integrating that volume will be a challenge. You have to receive the data, process it, and format it in the form that your business needs and that your customers can understand.

2. Management

What else do you need for such a large volume of information? A place to store it. Your storage solution can be in the cloud, on-premises, or both. You can also choose the form in which your data is stored, so that it is available in real time and on demand. More and more people are choosing a cloud solution for storage because it supports their current compute requirements.

3. Analysis

Okay, you have received and stored the data, but you need to analyze it before you can use it. Explore your data and use it to make important decisions, such as finding out which features your customers look for most, or use it to share research. Put it to work in whatever way you need: you made a big investment to set up this infrastructure, so use it.
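The three actions above (integration, management, analysis) can be sketched as a tiny pipeline. This is a deliberately simplified illustration: a plain Python list stands in for cloud or on-premises storage, and the function names, source names, and records are all hypothetical.

```python
import json
from collections import Counter


def integrate(raw_sources):
    """Step 1: receive records from several sources and normalise their format."""
    records = []
    for source_name, lines in raw_sources.items():
        for line in lines:
            record = json.loads(line)
            records.append({
                "source": source_name,
                "user": str(record["user"]).lower(),
                "feature": record["feature"],
            })
    return records


def manage(records, store):
    """Step 2: store the records (a list stands in for real storage here)."""
    store.extend(records)
    return store


def analyze(store):
    """Step 3: count which features are used most often."""
    return Counter(record["feature"] for record in store).most_common()


raw = {
    "web": ['{"user": "Ana", "feature": "search"}', '{"user": "Bo", "feature": "export"}'],
    "mobile": ['{"user": "ana", "feature": "search"}'],
}
storage = manage(integrate(raw), [])
top_features = analyze(storage)
print(top_features)
```

In a real system each step would be handled by dedicated tools and would run continuously, but the order of operations stays the same: bring the data in, put it somewhere reliable, then ask it questions.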

As we mentioned, when we talk about Big Data we always talk about the big Vs behind it. When the term Big Data appeared there were only three Vs, but now there are more, and new ones keep being added depending on what you need Big Data for. We cover some of the Vs in the next part of the article.

The big Vs behind Big Data

Volume

As the name suggests, Big Data means high volumes of data, so the amount of data you receive matters. This can be data of unknown value, such as data on the number of clicks on a webpage or a mobile app. It might be tens of terabytes of data for some organizations, and hundreds of petabytes for others. Or you may know exactly the source and the value of the data you receive, but you will still be receiving large volumes on a daily basis.

Velocity

Velocity is the big V that represents the rate at which data is received and acted on. If data is streamed directly into memory rather than written to disk, velocity is higher, so you can operate much faster and provide data in near real time. But this also requires the means to evaluate the data in real time. Velocity is also the most important V for fields like machine learning and artificial intelligence.
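Evaluating data in real time usually means processing each reading as it arrives instead of waiting for the full data set. A minimal sketch of that idea, assuming a simple stream of numeric sensor readings (the values and the window size are illustrative):

```python
from collections import deque


def rolling_average(stream, window=3):
    """Yield the average of the last `window` readings as each one arrives."""
    recent = deque(maxlen=window)  # only the latest readings are kept in memory
    for reading in stream:
        recent.append(reading)
        yield sum(recent) / len(recent)


readings = iter([10, 20, 30, 40])
averages = list(rolling_average(readings))
print(averages)
```

Because only the last few readings are held in memory, the same function works whether the stream has four values or four billion.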

Variety

Variety refers to the types of data that are available. When you work with so much data, you need to know that a large part of it is unstructured or semi-structured (text, audio, video, etc.). It requires some additional processing, including of its metadata, to make it understandable for everybody.

Veracity

Veracity refers to how accurate the data in the data sets is. You can collect a lot of data from social media or websites, but how can you be sure that the data is accurate and correct? Low-quality data without verification can cause issues. Uncertain data may lead to inaccurate analysis and cause you to make bad decisions. As a result, you need to always verify your data and be sure that you have enough accurate data available to have valid and meaningful results.
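Verification can start with very simple sanity checks. The sketch below filters out records that fail basic rules; the rules themselves (what counts as a plausible email or age) and the sample records are illustrative assumptions, and real validation would be much stricter.

```python
def is_valid(record):
    """Apply basic sanity checks; the rules here are illustrative assumptions."""
    email = record.get("email", "")
    age = record.get("age")
    has_email = "@" in email and "." in email.split("@")[-1]
    has_age = isinstance(age, int) and 0 < age < 120
    return has_email and has_age


records = [
    {"email": "ana@example.com", "age": 34},
    {"email": "not-an-email", "age": 29},
    {"email": "bo@example.com", "age": 430},
]
verified = [r for r in records if is_valid(r)]
accuracy_rate = len(verified) / len(records)
print(f"{len(verified)} of {len(records)} records passed the checks")
```

Tracking the share of records that pass, and not just discarding the failures, tells you how much you can trust a data source over time.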

Value

As we said, not all collected data has value or can be used to make business decisions. It is important to know the value of the data you have at your disposal. You will also have to put processes in place to clean your data and confirm that it is relevant to the purpose you have in mind.

Variability

When you have a lot of data, you can use it for multiple purposes and format it in different ways. It is not easy to collect, analyze, and manage so much data in the right way, so it’s normal to use it multiple times. This is what variability stands for: the option to use the data for multiple purposes.

We now know a lot about Big Data - what it is, the types of data and the big Vs. But this isn’t really all that useful if we don’t know what Big Data can do and why it’s becoming increasingly important.

Why is Big Data so important?

Big Data has a lot of potential. You can use the valuable insights that this data provides to make marketing decisions about your product and brand. Brands that use Big Data are able to make faster and more informed business decisions. Using all the information you have about your customers, you can make your product more customer-centric, create the content that your customers want, or personalize their journeys. Making decisions when you have all the information you need is easier, right?

To give you an example, just think how useful Big Data is in medical research, where it can help identify how dangerous certain illnesses could be for someone based on their personal medical information, or how some diseases should be treated. This is only one example of the use of Big Data, but it is one of the most important ones.

Something like online dating could become far more accurate once machines learn how to match couples based on all the information they have about the two people. Machine failures and crashes can be minimized because you will know under what conditions failures happen. You could have a car that drives itself and is safer than a car driven by a person because it doesn’t make human mistakes. It analyzes Big Data in real time and knows the best route to take to arrive at your destination on time.

Based on all the information they have about their clients, companies can now predict more accurately which segments of their customers will want to buy their products and when, so they know the best time to release them. Big Data is also helping companies run their operations much more efficiently.

Big Data is important for the progress of our technology and it can make our lives easier if we use it wisely and for good. The potential of Big Data is endless, so let’s check out some of the use cases.

Big Data uses

Big Data can be analyzed by humans and by machines, depending on your needs. Using different analytical methods, you can combine different types of data and sources to make meaningful discoveries and decisions. That way, you can release your products faster and target the right audience. Below you can see some of the most common uses of Big Data.

Product Development

When your main business is your product, Big Data is more than mandatory for you. Let’s take an example that almost everybody knows: Netflix. How do you think Netflix manages to send you an email with recommendations picked especially for you every week? With the help of Big Data analysis, of course. They use predictive models to tell you about new shows you may like by classifying data on the past and current shows that you watched or marked as a favourite. Other companies use additional resources such as social media information, store sales information, focus groups, surveys, tests, and much more to decide how to proceed when releasing a new product and whom to target.
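To give a feel for how a recommendation can be derived from viewing history, here is a deliberately simple sketch that ranks unwatched shows by how many genres they share with the shows a customer has watched. This is not Netflix's method, which is not described here; the catalog, the show titles, and the scoring rule are all made up for illustration.

```python
from collections import Counter


def recommend(watched, catalog, top_n=2):
    """Rank unwatched titles by how many genres they share with watched ones."""
    liked_genres = Counter(
        genre for title in watched for genre in catalog[title]
    )
    scores = {
        title: sum(liked_genres[genre] for genre in genres)
        for title, genres in catalog.items()
        if title not in watched
    }
    # Keep only titles with some overlap; break ties alphabetically.
    ranked = sorted(
        (title for title in scores if scores[title] > 0),
        key=lambda title: (-scores[title], title),
    )
    return ranked[:top_n]


catalog = {
    "Show A": {"drama", "crime"},
    "Show B": {"crime", "thriller"},
    "Show C": {"comedy"},
    "Show D": {"drama", "thriller"},
}
picks = recommend({"Show A", "Show B"}, catalog)
print(picks)
```

Real predictive models weigh far more signals than genre overlap, but the principle is the same: past behaviour is turned into a score for things the customer has not seen yet.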

Comparative analysis

When you know how your customers behave and can observe them in real time, you can compare their behaviour to the customer journeys of similar products and see where you are stronger than your competitors.

Customer Experience

The market is so big that it is hard for a product to stand out as unique. What you can do to distinguish yourself is put effort into personalizing your customers’ experiences. Big Data enables you to gather data from social media, web visits, call logs, and other sources to improve the interaction experience and maximize the value delivered.

Machine Learning

Machine learning is very popular right now, and everybody wants to know more. We are now able to create machines that learn by themselves, and that ability comes from Big Data and the machine learning models that have been developed thanks to it.

Scalability and predicting failures

It is important to know at any time how much of your infrastructure you need to mobilize, and to be able to predict mechanical failures. Analyzing all the data will not be easy at first because you will be overloaded with structured data (time periods, equipment) as well as unstructured data (log entries, error messages, etc.). But by taking all those indications into consideration, you can spot potential issues before problems happen or scale your use of resources. With Big Data, you can analyze customer feedback and predict future demand, so you will know when you need to have more resources available.
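As a small illustration of spotting issues in log entries, the sketch below counts error messages per machine and flags the ones that exceed a threshold. The log format (machine name, level, message), the machine names, and the threshold are all assumptions made for this example.

```python
from collections import Counter


def machines_at_risk(log_lines, max_errors=2):
    """Return machines whose ERROR count exceeds `max_errors`."""
    errors = Counter()
    for line in log_lines:
        # Assumed format: "<machine> <LEVEL> <free-text message>"
        machine, level, _message = line.split(" ", 2)
        if level == "ERROR":
            errors[machine] += 1
    return sorted(m for m, count in errors.items() if count > max_errors)


logs = [
    "pump-1 INFO started",
    "pump-2 ERROR pressure drop",
    "pump-2 ERROR pressure drop",
    "pump-1 ERROR sensor timeout",
    "pump-2 ERROR overheating",
]
at_risk = machines_at_risk(logs)
print(at_risk)
```

A production system would look at trends over time and combine logs with sensor readings, but even a simple count like this can surface a machine that deserves a closer look.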

Fraud and Compliance

Hacking… we all hate it, but it is becoming more and more frequent. Someone is trying to impersonate your brand, someone is trying to steal your data and the data of your clients… And hackers are becoming more creative every day. But the same applies to security and compliance requirements - they are constantly changing. Big Data can help you identify patterns in data that indicate fraud and you will know when and how to react.
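One very basic way to look for patterns that indicate fraud is to flag values that sit far away from the rest. The sketch below marks payments that are more than a chosen number of standard deviations from the mean. The payment amounts and the threshold are illustrative, and real fraud detection relies on far richer signals than a single number.

```python
from statistics import mean, stdev


def flag_unusual(amounts, threshold=2.0):
    """Return amounts more than `threshold` standard deviations from the mean."""
    if len(amounts) < 2:
        return []
    average, spread = mean(amounts), stdev(amounts)
    if spread == 0:
        return []
    return [a for a in amounts if abs(a - average) / spread > threshold]


payments = [20, 25, 22, 19, 24, 21, 23, 950]
suspicious = flag_unusual(payments)
print(suspicious)
```

A flagged payment is not proof of fraud; it is a prompt for a closer look, which is usually how such checks are used in practice.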

Your data analysts can find multiple purposes for your data and work out how to connect the different types of data you have. You can also use this data to publish official research and bring more attention to your brand.

Where is Big Data headed in the future?

Big Data is already changing the game in many fields and will undoubtedly continue to grow. Just imagine how much this can change our lives in the future! Once everything around us starts using the Internet (Internet of Things), the possibilities of using Big Data will be enormous. The amount of data available to us is only going to increase, and analytics technology will become more advanced. Big Data is one of the things that is going to shape the future of humanity.

All the tools used for Big Data are going to evolve as well, and the infrastructure requirements are going to change. Maybe in the future we will be able to store all the data we need on a single machine with more than enough space. This could make everything cheaper and easier to work with. Big Data is one of the subjects that we at Mailjet are interested in, and it is something that we will follow for sure.

If you want to know more on how we are using Big Data and what tools we are using, don’t forget to follow us on Twitter and Facebook to be the first ones to see our next article on the matter.

Big Data: Summing up

Big Data’s reach and possibilities would’ve seemed unthinkable a while back. Nowadays, though, there’s hardly any technological innovation that isn’t ruled by it.

But let’s be honest – understanding what big data is and how it works is no easy feat, no matter how well we’ve tried to explain it. We know it’s a lot to retain, so we’ve summarized the key aspects in a handy infographic below.

***

This is an updated version of the article “Big Data: What is it and how does it work?”, written by Gabriela Gavrailova and published on Mailjet’s blog on December 3rd, 2019.